AI is giving us an opportunity to rethink decision-making in education, work and beyond.

TL;DR: Using AI is like using Google Maps. The technology excels at prediction (analysing data and forecasting routes), but humans must retain judgement (deciding where to go and whether the journey is worth it).

AI should be used to think better, not to avoid thinking. Success in the 21st century comes from knowing how to decouple these two functions so you remain in the driving seat.

Here’s how.

Do you use Google Maps or Waze to get around?

The second question is trickier. Do any of you feel guilty about it? Do you worry that using Google Maps means you’re “cheating” at navigation, or that you’ll never develop a proper sense of direction?

Of course not. That would be silly.

Yet when it comes to using AI for decisions, I see people paralysed by exactly these fears, whether they are choosing what to study, planning a career move or even planning an article. “Is this cheating?” “Will I lose my critical thinking skills?” “Am I even thinking for myself anymore?”

Through teaching AI at the University of Malta, building projects and advising businesses and public entities, I’ve spent the past two years watching this tension play out across sectors: in classrooms, in boardrooms and in casual conversations with friends trying to figure out their next steps.

Today, I want to offer you a different way of thinking about AI, one that I constantly share with my students, clients and friends. I hope it will transform how you make decisions with the accessible tools available today.

The Decision-Making Decoupling Effect

Let’s break down what Google Maps actually does. It analyses vast amounts of data about roads, traffic patterns, routes and users. It identifies the optimal path based on current conditions, forecasts how long the journey will take and then gives you turn-by-turn directions. In short, it reads the environment, makes calculated predictions and presents them to you.

But here’s what Google Maps does not do:

- It doesn’t decide where you’re going

- It doesn’t determine whether that destination is worth visiting

- It doesn’t make the journey meaningful

- It doesn’t understand that you’re avoiding a particular route because of a difficult memory

These are all matters of judgement, and that’s on you.

We use this technology constantly, but we don’t outsource our entire lives to it. We still decide where to go. When it suggests a left turn into what is actually a no-entry street, we ignore it; we still override its suggestions when we know better. We still sometimes choose the scenic route, even when it’s slower.

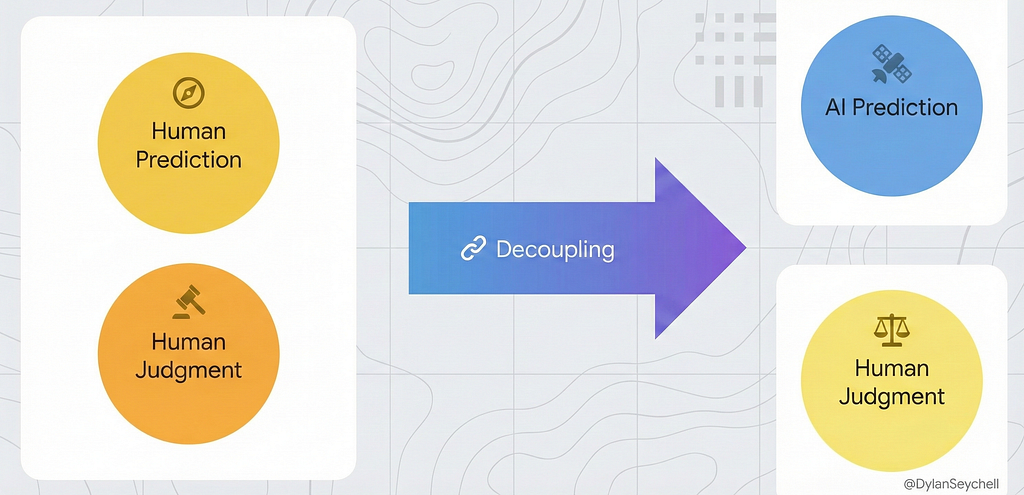

In essence, this gives us, as individuals, as businesses and as a society, an opportunity to decouple prediction from judgement. The idea builds on the foundational work of economists Ajay Agrawal, Joshua Gans and Avi Goldfarb in their book Prediction Machines: The Simple Economics of Artificial Intelligence (2018; updated editions available).

Google Maps (the AI) handles prediction: analysing patterns, identifying possibilities and forecasting outcomes. We keep the judgement: applying context and experience, weighing our values, drawing on intuition and making the strategic decisions that matter to us.

This is precisely how we should be thinking about AI in every area of our lives.

The Pattern is All Around Us

Once you see this pattern, you’ll notice it across nearly every AI application. Here are a few examples from my own experience.

In healthcare, AI can analyse medical scans and predict, with high accuracy, which lesions are likely to be cancerous, as our research at the University of Malta has shown. However, AI cannot decide whether a patient should undergo aggressive treatment, factor in their quality-of-life preferences, or weigh the emotional impact on their family. That requires human judgement from medical professionals who know the patient’s full context.

In recruitment, AI can screen thousands of CVs and predict which candidates have experience that matches a job description. But it cannot assess cultural fit, recognise unconventional brilliance, or decide that sometimes the “riskier” hire is precisely what a team needs. That requires human judgement from people who understand the organisation’s actual needs. Recruiting people is not like choosing your next laptop: you cannot simply benchmark people on specifications, let alone automate that comparison. This deserves its own blog post, but that’s for another day.

In finance, AI can analyse market patterns and predict which investments might generate returns. But it cannot determine your risk tolerance, understand what you’re saving for, or account for your preference not to invest in specific industries. That requires you to assess your own values and goals.

In creative work, AI can generate images, write text, and compose music. But it cannot decide what’s worth creating, what message matters, or what will resonate with a specific audience. That requires human judgement about meaning and purpose. In other words, this judgement is a form of expression, and expression is, in my interpretation, what fundamentally defines art.

The pattern is consistent, and it extends well beyond the examples above: AI excels at prediction, while humans excel at judgement.

Why This Matters Now

We’re living through a strange moment. AI capabilities have expanded so rapidly that we haven’t yet developed effective cultural frameworks for using them. I subscribe to the view that, in specific areas, AI has already achieved superior intelligence. Society seems to be stuck oscillating between two equally unhelpful extremes:

Extreme 1: AI as Threat. “AI will replace us all. We need to ban it, avoid it, prove we can succeed without it.”

Extreme 2: AI as Saviour. “AI will solve everything. Just let the algorithm decide. Human judgement is biased and flawed anyway.”

Both of these are, well…extremes and thus unreasonable nonsense.

The reality is far more interesting and pragmatic. AI is a tool that makes specific tasks dramatically more efficient, but only when used by humans who understand both its capabilities and its limitations.

This isn’t a new pattern. We’ve watched this movie before:

- Calculators didn’t make mathematics obsolete; they freed mathematicians to tackle more complex problems. Thanks to calculators, few of us still do long division or multiplication by hand. That freed-up effort matters: in a physics examination, for example, we can assess students on 5 topics instead of 2, because they can focus on the physics rather than on long multiplication or division.

- Spell-checkers didn’t make writing skills irrelevant; they freed writers to focus on ideas rather than typos. These tools have evolved dramatically, and today tools such as Grammarly help us refine our writing and learn more about ourselves as writers and about our style.

AI is the next iteration of this pattern. It goes beyond calculators and spell-checkers because it is more powerful and more general-purpose. It can also learn patterns from the data we feed it and generate new content based on those patterns. This opens a whole new world of possibilities… and challenges.

How Does It Play Out in Practice?

Let me make this concrete with a simple example.

Imagine you’re working on any kind of written document: an essay, a report, an email that matters. You draft it, but you know it needs work. The ideas are there, but the writing needs refinement.

You could ask AI to “write this for me” and submit whatever it produces. That’s outsourcing both prediction and judgement. You haven’t learned anything.

Alternatively, you could write your own draft first and then ask the AI: “Review this and identify where my argument is unclear or my language is imprecise.” You would then critically review its feedback, decide which suggestions to accept and how to implement them, and make your own final decisions about what your document should say. The judgement stays yours.

In the second approach:

- You write the original ideas (judgement)

- AI identifies potential weaknesses (prediction)

- You make the final decisions (judgement)

The difference is not about whether AI was used. It’s about whether you remained in the decision-making seat.

The Skills We Actually Need

If AI is going to be our constant companion, a tool that is always there for us to use, we need to develop new fluencies.

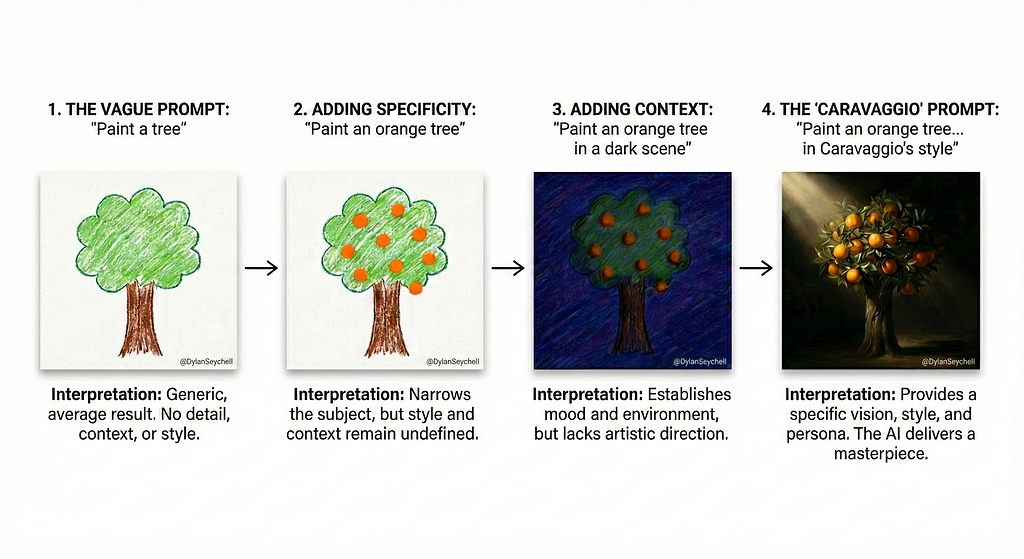

1. Asking Good Questions is essential. This skill is often called prompt engineering. Think about commissioning a painting: you could ask an artist to “Paint a tree” or to “Paint an orange tree in a dark scene in Caravaggio’s style”. The more specific we are, the better the output.

- Poor prompt: “Tell me about marketing”

- Better prompt: “I’m launching a small tourism business in Malta. What are three low-cost marketing strategies I should consider, and what are the pros and cons of each?”

2. Critical Evaluation is indispensable when using AI tools. AI sounds confident even when it’s wrong. It is designed to please its users and optimised for smooth, plausible conversation, not for factual accuracy. It is therefore essential that we check sources and question assumptions. I always advise testing suggestions against your own knowledge or verifying them with a Google search where needed.

3. Ethical Judgement is not just about using these systems well. It is also about using them safely, for you and for those around you. It is essential to know when not to use AI at all: when a decision involves deeply personal values, when transparency isn’t possible, or when the struggle itself is valuable. In those moments, it is not a good idea to share such thoughts with a machine that mimics empathy and emotion without meaning it, and that does its best to keep you engaged in conversations that lead nowhere near the solutions you need. There are always people ready to help you. Be strong and seek help from other humans, not from a self-serving machine.

The Bottom Line

The difference comes down to this: Are you using AI to avoid or subcontract thinking, or to think better?

When you use AI to:

- Understand something you’ve struggled with → Thinking better

- Get an answer you use without verification → Avoiding thinking

- Generate options you evaluate critically → Thinking better

- Accept the first suggestion without questioning → Avoiding thinking

The pattern is clear. AI used in the service of your thinking is valuable. AI used as a replacement for your thinking is intellectual self-sabotage.

Success in the 21st century is not about proving you can function without AI. It is about proving you can function wisely with AI, and that’s a far more interesting challenge. So use the tools.

Just make sure you’re still the one doing the driving.

Dr Dylan Seychell is a resident academic in the Department of Artificial Intelligence at the University of Malta, specialised in Computer Vision and Applied Machine Learning. Dylan co-founded companies in the tourism and cultural heritage sectors and is the deputy chair of the Tourism Operators Committee in The Malta Chamber. He is involved in Malta’s National AI Literacy Programme, “AI Għal Kulħadd,” and leads R&D projects in News Analysis and Environmental Management.

Have thoughts on using AI for decision-making? Connect with me on LinkedIn.

Why Does Using AI Feel Like Cheating when It’s Actually Like Google Maps? was originally published in UX Planet on Medium.