And actually wants to answer 🙂

Imagine clicking “submit” on an online survey, not with a sigh of relief, but with a sense of empowerment. Your answers, joining a symphony of over 200 million others collected daily, hold the potential to shape products, services, and experiences for millions worldwide. That’s the power of online questionnaires, a tool embraced by businesses all across the globe to collect valuable insights and drive decision-making.

However, crafting a survey that captivates attention and delivers meaningful data is a complex task. It’s a delicate balance of science and art, where a single poorly worded question can send response rates tumbling by 20%.

Instead of just diving into dry theories, let’s make things real! We’ll put the ideas we explore into practice with a survey I designed for my Human Factors course. This survey, titled “Learning Management Systems (LMS) Accessibility Feedback Survey,” focuses on understanding users’ experiences, opinions, and judgments about the accessibility features of different LMS platforms.

Starting from the basics

What’s the Aim of the Survey?

This survey aims to understand users’ experiences, opinions, and judgments regarding the accessibility features of various LMS platforms.

For our example, here is the aim rephrased to be more action-oriented and engaging:

Take the LMS accessibility survey! Your feedback is crucial in our quest to create accessible learning experiences for all. Share your thoughts in this short survey and help us break down barriers in online education.

🎯 Stating the survey aim builds trust, focuses responses, motivates participants, and ensures relevant data. It’s transparency and good research practice.

Who are the participants?

⤷ Why filter the audience?

- Accurate Data — Only get data from the people most relevant to your research question.

- Efficient Analysis — Saves time and resources by focusing on the responses that matter.

- Meaningful Results✨— Gain a deeper understanding of their experiences and opinions, leading to more actionable conclusions (The whole point of doing this exercise is to extract this!).

In our study, the target participants are individuals aged 18+ who are currently involved with any educational institution, online or offline, in any capacity. We specifically seek users who regularly use an LMS, either on their own or through a platform provided by their institution.

⤷ How do you filter effectively?

- Define your target audience — Be specific about demographics, behaviours, or other relevant characteristics.

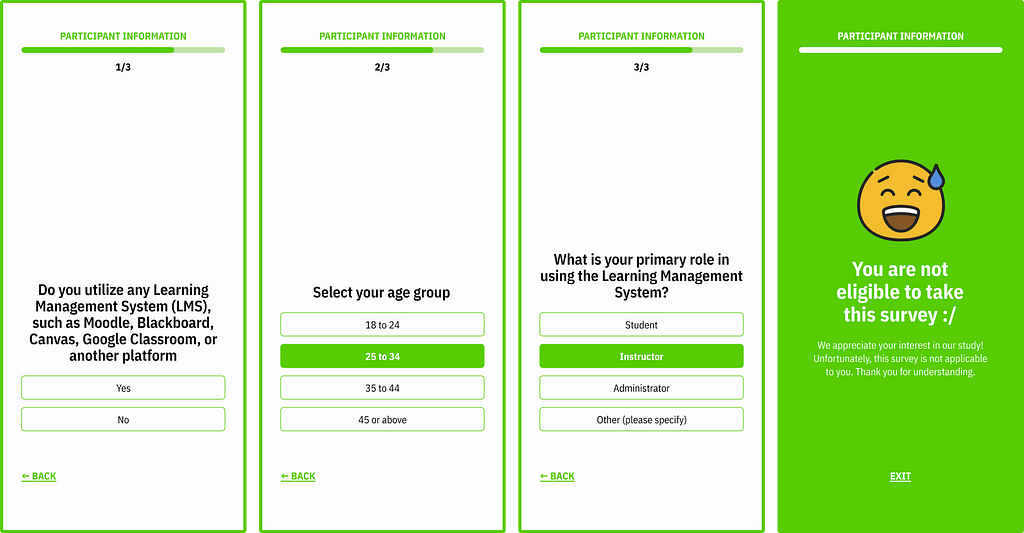

- Use screening questions — Be clear about who should participate when you introduce the survey, and also ask questions at the beginning of the survey to identify and exclude ineligible respondents. In our case, we asked 3 screening questions.
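If you clean the data yourself, or your survey tool supports scripting, the screening step can be sketched as a simple eligibility check. This is only a rough sketch: the three field names below are assumptions derived from the target-audience description above, not the actual wording of the survey's screening questions.

```python
from dataclasses import dataclass


@dataclass
class ScreeningAnswers:
    # Hypothetical fields; the real survey's screening questions may differ.
    age: int
    involved_with_institution: bool
    uses_lms_regularly: bool


def is_eligible(answers: ScreeningAnswers) -> bool:
    """Return True only if the respondent matches the target audience."""
    return (
        answers.age >= 18
        and answers.involved_with_institution
        and answers.uses_lms_regularly
    )


# Made-up respondents, for illustration only.
respondents = [
    ScreeningAnswers(age=22, involved_with_institution=True, uses_lms_regularly=True),
    ScreeningAnswers(age=17, involved_with_institution=True, uses_lms_regularly=True),
    ScreeningAnswers(age=30, involved_with_institution=True, uses_lms_regularly=False),
]

eligible = [r for r in respondents if is_eligible(r)]
print(len(eligible))  # 1
```

Only the first respondent passes all three checks, so the other two would be thanked and routed out before the main questions.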

Additional introductory information you should provide

It’s a recommended practice to provide participants with a brief explanation of what to expect from the survey and an estimate of how long it will take to complete.

Survey Design

Just like any human-centred system, a survey involves cognitive aspects that UX professionals need to understand in order to make it engaging.

Survey flow

⤷ Start Broad, End Specific — Begin with general usage patterns, then delve into details like features and training.

⤷ Logical Progression — Build on each question’s context, avoiding jumps and maintaining a natural flow.

⤷ Challenges Before Specifics — Explore user challenges before asking about features or training effectiveness.

⤷ Demographics Later✨ — Keep personal info towards the end. (Not a typo) Placing personal questions later enhances engagement, reduces bias, and unlocks more authentic responses. Respondents feel less scrutinized, leading to richer, more reliable feedback².

⤷ Open-Ended Conclusion — Allow respondents to share thoughts and suggestions beyond pre-set options.

Our survey follows this structure, moving from general LMS use to more specific topics like accessibility features and training.

🎯 A well-designed questionnaire guides users through a logical progression, minimizes distractions, and gathers valuable insights (Schwarz N, 1999).
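One way to sanity-check a draft against the flow rules above is to write the section order down and test it. The sketch below is illustrative only: the section names and the rule pairs are assumptions based on the flow described in this article, not an export from a real survey tool.

```python
# Section names are illustrative assumptions based on the flow described above.
SURVEY_FLOW = [
    "screening",
    "general_lms_usage",
    "challenges",
    "accessibility_features",
    "training",
    "demographics",
    "open_ended_feedback",
]


def comes_before(flow: list[str], earlier: str, later: str) -> bool:
    """Check that section `earlier` appears before section `later`."""
    return flow.index(earlier) < flow.index(later)


# Ordering rules expressed as (earlier, later) pairs.
RULES = [
    ("general_lms_usage", "accessibility_features"),  # start broad, end specific
    ("challenges", "accessibility_features"),         # challenges before specifics
    ("challenges", "training"),
    ("training", "demographics"),                     # demographics later
]

violations = [rule for rule in RULES if not comes_before(SURVEY_FLOW, *rule)]
print(violations)  # []
```

An empty list means the draft respects every rule; moving "demographics" to the top would make the last rule show up as a violation.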

Let’s understand how our participants understand a question!

Understanding a question from the respondent’s perspective involves 3 main aspects: Pragmatic Meaning, Response Alternatives, and the Nature of Previous Questions¹.

⤷ The Pragmatic Meaning of a question refers to how the question is practically understood and interpreted in real-life situations. It’s about how the wording and structure of a question influence the respondent’s understanding and, consequently, their answers.

🎯 Our goal is to make sure the questions are clear, relevant, and easy for people to respond to based on their everyday experiences.

An example —

❎ Don’t —

What is the frequency with which you engage in the utilization of the Learning Management System(s) for the purposes of educational interaction and knowledge acquisition within the digital learning milieu?

✅ Do —

How often do you use your Learning Management System(s)?

Words and phrases that seem ordinary in our everyday conversations may only be familiar to some participants. Therefore, it’s essential to construct questions to ensure widespread understanding.

⤷ The Response Alternatives provided to answer a question affect the response. People interpret the same question differently depending on the choices they are offered¹.

For instance —

Question — How frequently do you use your LMS?

Options set (1)— Frequently, Occasionally, Rarely, Never

Options set (2) — Daily, Weekly, Monthly, Rarely, Never

🎯 Options set (2) provides a clearer scale, which can lead to more accurate responses: vague labels such as “Frequently” and “Occasionally” in Options set (1) mean different things to different people, while concrete intervals leave less room for interpretation.

Another example—

Question — What is your opinion on immigration?

Options set (1) — Immigrants contribute positively to society, Immigrants take jobs away from citizens, Immigrants increase crime rates

Options set (2) — Immigrants have a positive impact on the economy, Immigrants can strain social services, Immigrants bring diversity to communities

🎯 Options set (1) presents options that are more likely to evoke negative stereotypes, while Options set (2) presents options that are more neutral and balanced.

🎯 When memory is hazy, people use provided options as cues to reconstruct their past experiences. The way options are presented significantly impacts their judgments and chosen responses.

For example —

Question — How often do you come across accessibility features while using your LMS?

Options set (1) — Never, 1–2 times, 3–5 times, More than 5 times

Options set (2) — Very Often (more than 10 times a month), Often (6–10 times a month), Sometimes (3–5 times a month), Rarely (1–2 times a month), Never

Choosing from Options set (1) can be challenging: the counts come with no reference period, and respondents are unlikely to have actively tracked those encounters. Options set (2) pairs each label with a specific range per month, which helps them align their memory with a category and leads to more reliable responses.
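A quick way to check that a set of ranges has no gaps or overlaps is to write the mapping out. The sketch below uses the labels and monthly ranges from Options set (2); the function name `frequency_label` is made up for this illustration.

```python
def frequency_label(times_per_month: int) -> str:
    """Map a monthly count onto the labels from Options set (2)."""
    if times_per_month < 0:
        raise ValueError("count cannot be negative")
    if times_per_month == 0:
        return "Never"
    if times_per_month <= 2:
        return "Rarely"
    if times_per_month <= 5:
        return "Sometimes"
    if times_per_month <= 10:
        return "Often"
    return "Very Often"


# Every non-negative count lands in exactly one category.
for count in (0, 1, 4, 10, 11):
    print(count, frequency_label(count))
```

If a count could fall into two categories, or into none, the respondent faces the same ambiguity, so this kind of check is worth doing before the survey goes out.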

⤷ The information that immediately comes to mind while answering a question (Nature of Previous Questions) affects how respondents answer. If earlier survey questions prompt positive thoughts, their later responses may be more positive, and vice versa.

When answering survey questions, individuals bring to mind certain thoughts or experiences. Some of these thoughts are always on their minds (chronically accessible), while others may come up due to specific survey questions (temporarily accessible).

Rating Scales

Imagine you’re giving out stars for a restaurant review. A positive unipolar scale is like giving stars from 1 (Not at all satisfied) to 5 (Extremely satisfied). It’s all about how good it is, without worrying about bad stuff.

This is a good way to ask questions in surveys because² —

⤷ It’s simple — Everyone understands that more stars mean better.

⤷ It’s clear — People focus on just one thing (like satisfaction) without getting confused.

⤷ It’s easy to analyze — We can quickly see what people liked most and least.

🎯 So, next time you make a survey, think about using positive stars like 1–5 for rating questions. It’ll make things easier for you and everyone who answers!
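To show what "easy to analyze" can look like in practice, here is a small sketch that summarizes 1–5 ratings using only Python's standard library. The ratings are made-up numbers for illustration, not data from the LMS survey.

```python
from collections import Counter
from statistics import mean

# Made-up example ratings on a 1-5 scale, for illustration only.
ratings = [5, 4, 4, 3, 5, 2, 4]

counts = Counter(ratings)
# Include every star level, even the ones nobody picked.
distribution = {stars: counts.get(stars, 0) for stars in range(1, 6)}

print(distribution)             # {1: 0, 2: 1, 3: 1, 4: 3, 5: 2}
print(round(mean(ratings), 2))  # 3.86
```

Looking at the distribution alongside the average helps, since an average alone can hide a split between very happy and very unhappy respondents.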

Confidentiality in surveys is key!

Maintaining participant confidentiality within a survey is more than good practice; it’s a cornerstone of ethical research methodology. By providing assurances of anonymity, researchers achieve several crucial benefits¹ —

⤷ Honest data — Assured anonymity encourages truthful responses, boosting data reliability.

⤷ Higher participation — Trusting that answers won’t be traced back to them entices more people to participate.

⤷ Reduced bias — No pressure to conform leads to less biased and more accurate reflections of opinions and experiences.

⤷ Strengthened trust — Protecting privacy demonstrates ethical practices, building trust with participants for future research.

🎯 Therefore, ensuring participant confidentiality is not just a recommendation, it’s an essential component of responsible research.

🚀 Boost Your Research: In a Nutshell

Craft a survey that’s an engaging conversation, not an interrogation. Start with a clear “why,” target the right participants, then guide them through a logical flow with everyday language and star-studded ratings. Save demographics for later, respect their time, and voilà! You’ve brewed a potent data elixir, brimming with rich insights and a dash of participant satisfaction.

References

1. Schwarz N. Self-reports: How the questions shape the answers. American Psychologist. 1999 Feb;54(2):93.

2. Rattray J, Jones MC. Essential elements of questionnaire design and development. Journal of Clinical Nursing. 2007 Feb;16(2):234–43.


Questioning the Questions: How to design a survey that nobody hates was originally published in UX Planet on Medium.