This strategic UX insight might have been one of the reasons why OpenAI decided to become a platform company rather than a solutions company.

Authors: Zsombor Varnagy-Toth is a UX Researcher and author of “A Knack for Usability Testing.” Nedda Nagy is a UX Designer. They both work at SAP Emarsys, but the views and opinions expressed here do not necessarily reflect the position of their employer.

Why is this topic important?

AI enthusiasts often portray the AI chat as the one user interface that is going to revolutionize how users interact with computers. They talk about it as the only UI we are ever going to need.

That is not true. In a previous article, we showed that forms have better usability than conversational UIs. Now we would like to show that, for many interactions, editor UIs have better usability than conversational UIs too.

Here is our prediction for when the AI chat craze settles:

Conversational AI is not going to replace traditional user interfaces. The predominant design pattern will be traditional UIs and conversational UIs used side-by-side.

Conversational UI will be just another UI component in our toolkit that we may or may not include in our designs, depending on what works best for the use case.

Editor UIs’ unfair advantage

The essence of modern editor user interfaces is that they visualize the object the user is working on. That object may be a piece of text, a piece of art, a webpage, a finance spreadsheet, or anything else.

Visualizing the manipulated object has major advantages.

Advantage #1: An overview helps decide the next step

Users often have an idea of what they want the object to look like in the end. When they look at the current state of the object, they can tell what needs further adjustments.

Advantage #2: Externalized memory makes us smarter

Visualizing the object also eases the cognitive load on users. They don't need to keep the entire object in mind with all of its details, because they can refresh those details whenever they need them simply by looking at the visual representation.

Without a visual representation of the object, the user's workflow would resemble playing chess in your head: you would have to calculate the next move while also keeping the state of the game in mind. That takes up a lot of mental capacity and results in dumber chess moves.

Advantage #3: Closing the loop

When the object is visualized, the user instantly sees the results of their manipulations, which helps them change course if needed. Imagine working on a piece of art and trying different colors. You can only tell whether a color works once you apply it and see the result.

Advantage #4: Pick parts to manipulate

An object (a text, an image, a webpage, etc.) has many parts that can be manipulated. A visual representation of the object provides an easy way to click and select the part you want to manipulate: for example, selecting a sentence to be rewritten or picking a part of an image to be removed. With an editor UI, this is a simple click.

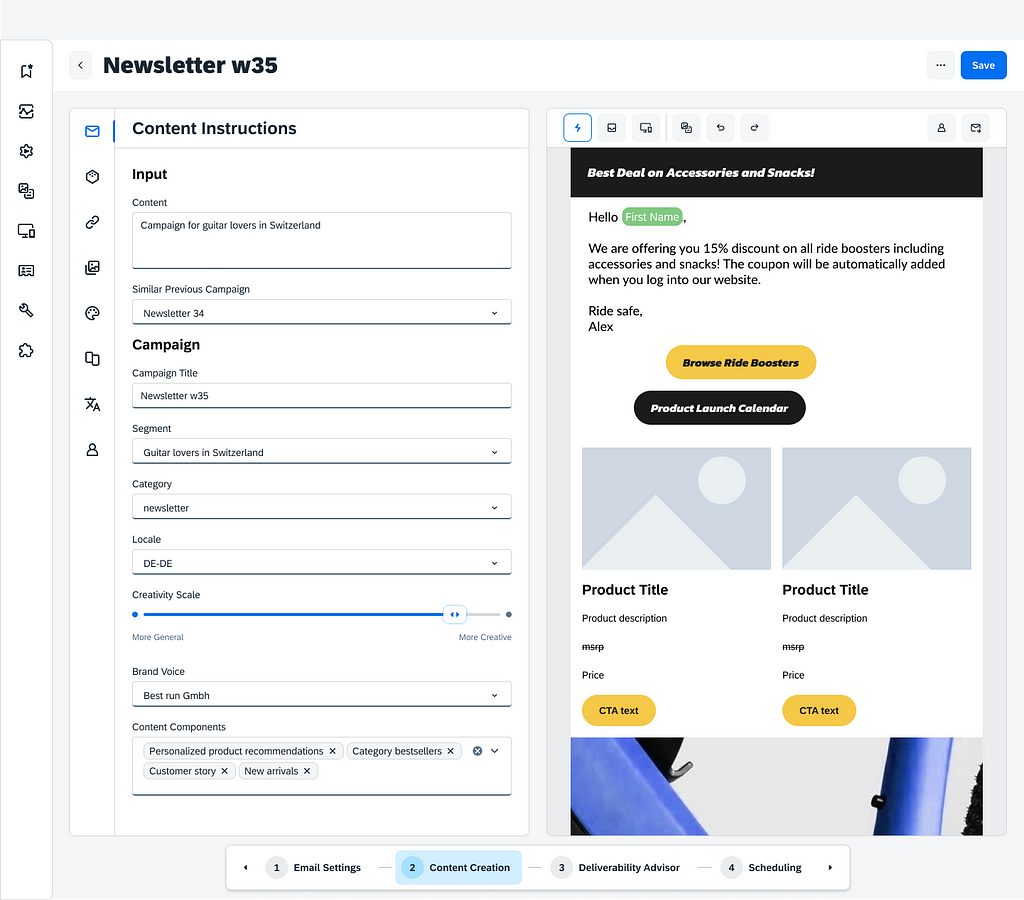

Conversational UIs vs Editor UIs

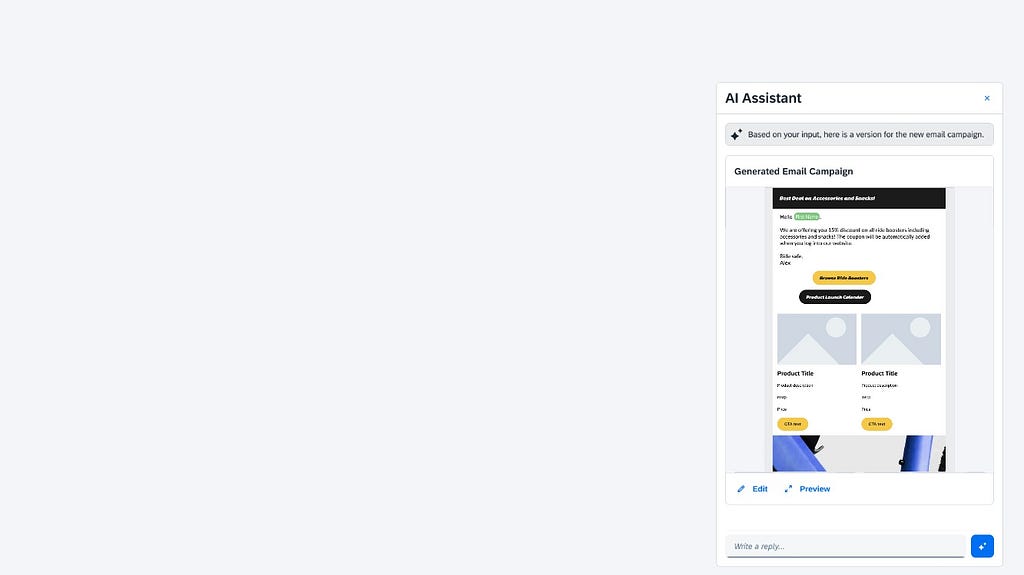

If a conversational UI provides a visual representation of an object at all, it’s inferior to an editor UI for the following reasons.

The way AI assistants are typically designed, they are confined to a mobile screen-sized area, so they can only show a miniaturized version of the real thing. This means they either don’t give a good overview, or they don’t show enough of the details.

Due to the limited screen space, a typical AI assistant doesn’t offer direct manipulation of the object. The visual is read-only. You can’t click on the object and change things.

The primary way to interact with the object is through the conversation. You need to type out what you want to change. For recurring interactions, it's far easier to click a button in an editor than to type out the same command for the AI assistant again and again. But the toughest part of the conversational interaction is referencing parts of the object using language. Imagine wanting to modify a sentence in a document, but instead of clicking on the sentence, you had to describe which sentence you meant and what to change about it.

Having to manipulate the object via the conversation has one more negative side effect: the object you manipulate slowly but surely scrolls out of view.

Prediction: conversational UI to complement editor UIs

While conversational UIs have some great things to offer (such as replacing a thousand interactions with a single sentence like "Make this doc more formal"), they simply can't replace editor UIs, because users need a full visual representation of the object while they are working on it. A representation that gives an overview while also showing enough of the details. A representation that offers direct interaction with the object and an easy way to pick and select its parts. A representation that stays on the screen while the user interacts with it.

This leads us to our conclusion.

Conversational AI is not going to replace editor UIs. The predominant design pattern will be traditional UIs and conversational UIs used side-by-side so they can be used in an interleaved fashion.

This pattern will let the editor UI do what it does best and let the conversational UI do what it does best.

Picture this interleaved workflow in a doc editor:

- Conversational UI: The user types “Give me an outline on topic X.” The machine generates the outline.

- Editor UI: The user selects Chapter 3, and cuts and pastes it in front of Chapter 1.

- Editor UI: The user changes the wording of Chapter 2, then selects this chapter and…

- Conversational UI: …asks the assistant to “Explain this point in 200 words.” The assistant drafts a paragraph just below the chapter heading.

- Editor UI: The user reviews the AI-generated section and injects their own thoughts into it. Removes what they don’t like. Then selects the entire paragraph and…

- Conversational UI: …asks the assistant to “Find a good example for this point in big tech.”

It's easy to see that each of these interactions is best done in one of the two UIs and would be clumsy in the other. The user truly needs both kinds of UI to get the best experience.

This is why we think conversational AI should be seen as another UI component rather than a standalone AI assistant. It can complement traditional UIs, but it can't manage entire workflows end-to-end.

ChatGPT is a complementary UI component too. It just doesn’t know about it.

We think that conversational UIs are best when they are integrated into the context of various other kinds of UIs, so they complement each other.

You might think that ChatGPT is a counter-example to that. It appears to be a standalone AI assistant that can help users get jobs done end-to-end.

That is just not the reality of most use cases. Think of writing an article using ChatGPT. The user can do the first few steps in ChatGPT but then needs to move the draft into another tool to make edits, apply formatting, etc. The user may even bring the content back to ChatGPT for certain edits, only to move it back to an editor afterwards. ChatGPT serves only some of the interactions in the workflow. The rest are so unbearable in a conversational UI that users would rather copy-paste the text back and forth than stick with ChatGPT for the entire workflow. All in all, if we look at the end-to-end workflow, ChatGPT alone is not up to the job.

This UX insight may have played a crucial role in OpenAI's decision to become a platform company. They must have realized that, for most jobs, an AI chat alone can't provide an acceptable level of user experience. They may have had two options to choose from:

(A) They try to compete for end-to-end use cases against established software companies that already have purpose-built UIs for those use cases. OpenAI would have needed to acquire the domain knowledge and then build those purpose-built UIs to be competitive, whereas the software companies only needed to add a conversational AI component to their existing UIs.

(B) They don’t compete for any end-to-end use case but rent their tech out to software companies that can embed conversational AI into their existing UIs. This way OpenAI can still get a slice of the pie rather than get out-competed.

— — —

These insights didn't come to us out of thin air. They are the results of in-depth usability testing. While most companies think of usability testing as a low-level tactical tool, if you do the testing right, you can unlock revelations of strategic importance. Check out Zsombor's book, A Knack for Usability Testing (available as a book and ebook), to learn how.

Have a great day!

"Will AI chat replace legacy UIs? Unlikely for editor UIs." was originally published in UX Planet on Medium.