A UX case study on making multilingual music more meaningful.

My Role

In this project I identified a missed opportunity, so on top of my end-to-end design ownership, I took on product responsibilities (I wrote my own PRD, with AI help).

Trailer before the Movie

Imagine listening to a beautiful Punjabi or Spanish song but missing the meaning.

What if YouTube Music could translate lyrics for you in real time, without breaking the vibe?

Here’s the core experience — from triggering translation to how it integrates into the player. Deep dives on user research, configurations, and edge cases follow.

Now let’s dive deep into the process. KPIs have been captured at the end.

Why This Problem?

I listen to music every day, and often find myself drawn to songs in languages I don’t understand. To get their meaning, I had to Google the lyrics or watch YouTube translations, which pulled me out of the moment.

That made me ask:

What if lyrics translation happened within the listening experience itself?

Is This a Real Problem?

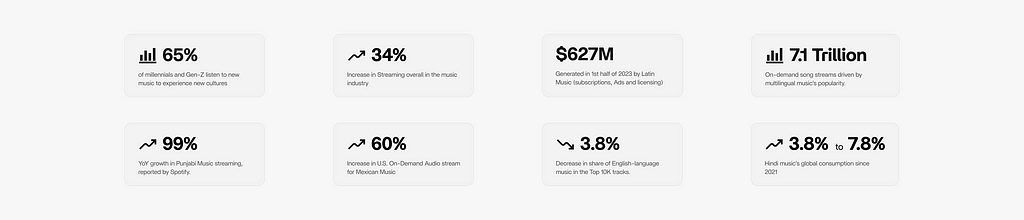

Market Research

I wrote a brief PRD for myself based on the research. You can refer to it here, or just take a look at my market research summary:

My Hypothesis

To enjoy their favorite songs in non-native languages, users would love to have optional, real-time lyrics translation.

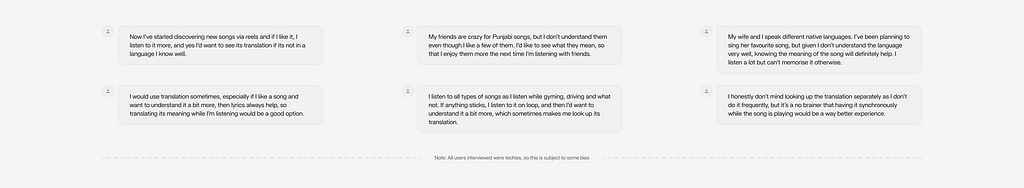

User Interviews for Understanding in Depth

I interviewed 6 users to understand their music listening experience firsthand.

Criteria for recruitment: Users should be interested in songs in non-native languages, especially ones that they don’t completely understand. Here are the major takeaways and excerpts:

Understanding user listening contexts (e.g., active discovery vs. passive listening) was crucial in forecasting the adoption and impact of real-time lyrics translation on user engagement.

Problem Statement

Multilingual music listeners need real-time translation of lyrics because it enables them to understand and connect with non-native songs during playback, enhancing their overall listening experience.

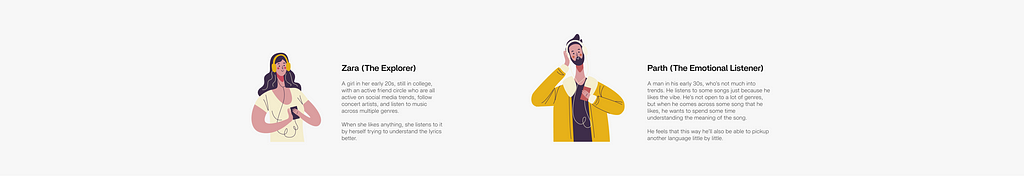

For whom?

Based on the limited research, I created the following lightweight personas:

Use case details

Why only favorite songs?

Users don’t look for translation in every song from a non-native language.

Sometimes they connect with a song and want to understand and experience it during playback, and that’s when knowing the meaning would be of real help.

In most cases, they wouldn’t open the translated lyrics unless the song has something unique in terms of trends, emotional relevance, etc.

What kind of Translation?

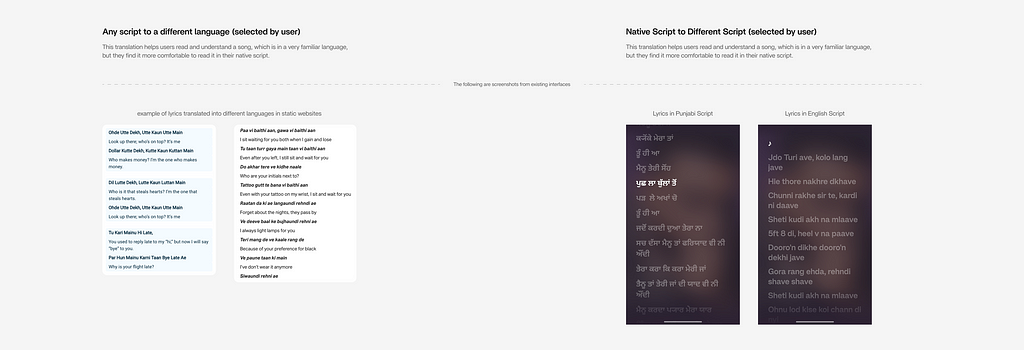

Script vs Language: Both full translation and transliteration (e.g., Hindi in Roman script) are valid use cases.

Should Translation Always be On?

Big NO. It’s a complementary feature for very specific sets of users with explicit use cases.

Users can change a setting to turn translation on by default for any genre of songs they want; otherwise it would be manually triggered.

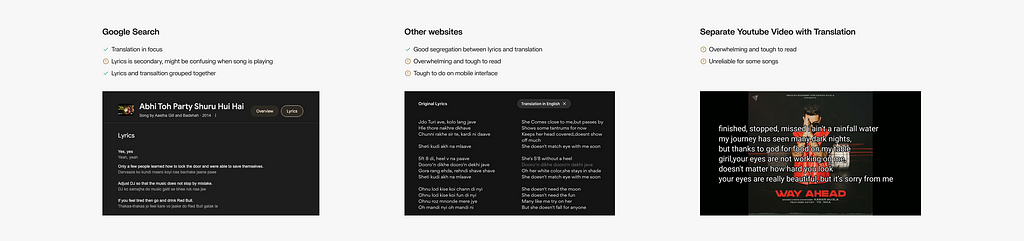

I looked at Existing Solutions…

Music apps don’t support this today, so users must be achieving the same goal some other way. I checked the most frequently used ways to read translations.

Ideation and Low-Fidelity Exploration

I started with paper sketching and asked myself some key questions:

- Where should the translation appear?

- How should users trigger it?

- What’s the right balance between lyrics and translation?

Concept Testing

To move swiftly, I wanted to validate the progress with the users.

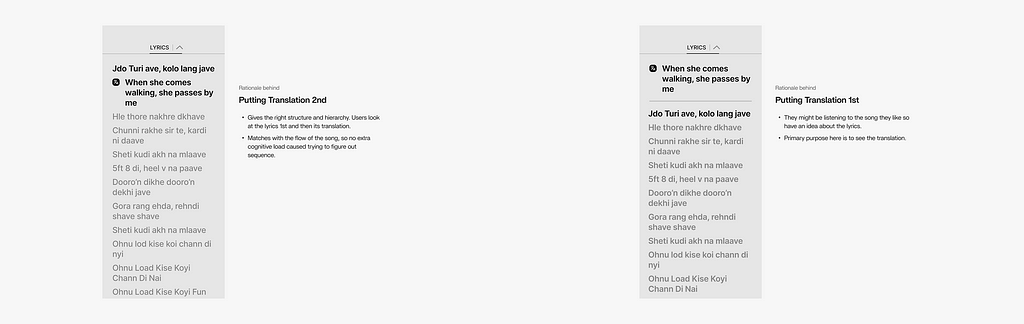

One question I needed an answer to:

- Show lyrics first, then translation, or the reverse?

Major Learnings to Incorporate

I tested the prototype using Figma Mirror, again with the same 6 users, and here’s the key takeaway:

Users want to see original lyrics first. Translation should follow to preserve structure and rhythm.
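The ordering rule from this test can be sketched in a few lines of code. This is only an illustration of the "original first, translation after" layout, not how YouTube Music renders lyrics; `LyricLine` and `interleave` are hypothetical names, and the sample lines are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LyricLine:
    original: str
    translation: Optional[str] = None  # None when no translation exists


def interleave(lines: list[LyricLine], show_translation: bool) -> list[str]:
    """Return display rows: each original line, followed by its translation."""
    rows: list[str] = []
    for line in lines:
        rows.append(line.original)  # the original lyric always comes first
        if show_translation and line.translation:
            rows.append(line.translation)  # translation follows its own line
    return rows


sample = [
    LyricLine("Hola, mi amor", "Hello, my love"),
    LyricLine("La la la"),  # untranslated filler line stays as-is
]
for row in interleave(sample, show_translation=True):
    print(row)
```

Keeping each translation directly under its own line, rather than in a separate block, is what preserves the structure and rhythm users asked for.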

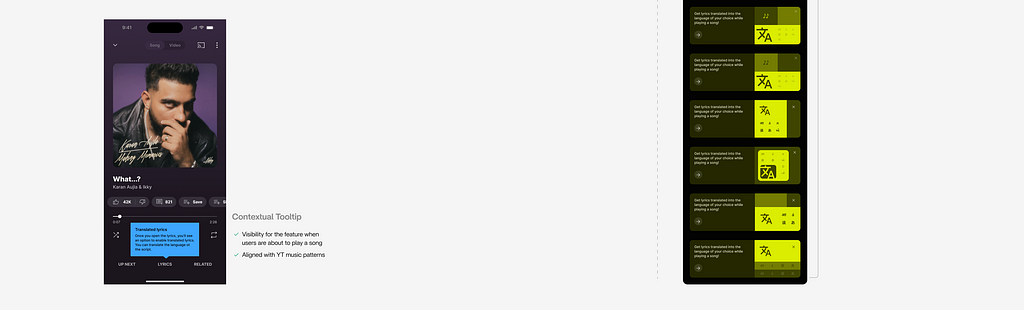

Designing for Discoverability

Before finalizing the core product, I explored banner designs to announce this feature in-app.

The feature demo video still needs a few more conditions defined, to ensure that users see the demo in the genre that interests them the most.

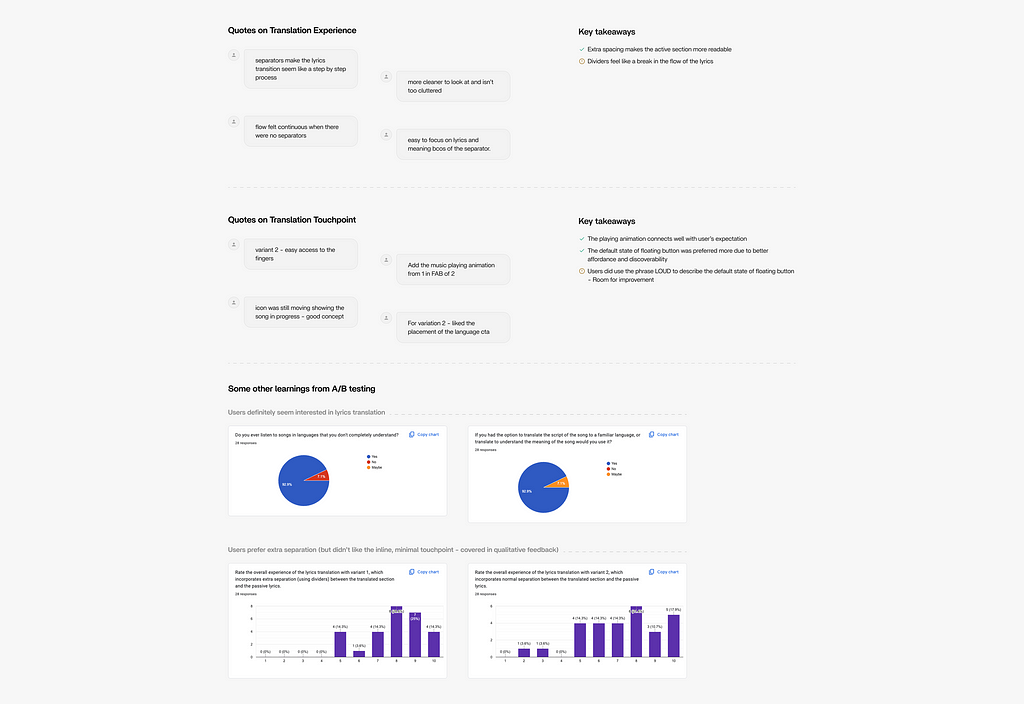

A/B Testing with 28 Users (Using Figma Mirror)

Criteria: Open to all music lovers, not just multilingual music lovers. (I wanted to see how different the reaction would be, since the feature would be available to everyone.)

Things to be tested (2 Versions — 4 variations):

- Preference of translation with separation vs without

- Preference of translation touchpoint being a floating button vs an inline minimal version

After testing, I asked them to fill out a Google Form to help answer a few qualitative and quantitative questions.

Reading between the lines

Once I went through all the feedback in detail, I understood that users want:

- Something that’s easier to read with more spacing, but doesn’t break the flow with dividers.

- Something that’s easily discoverable like the floating button, but not as loud the entire time.

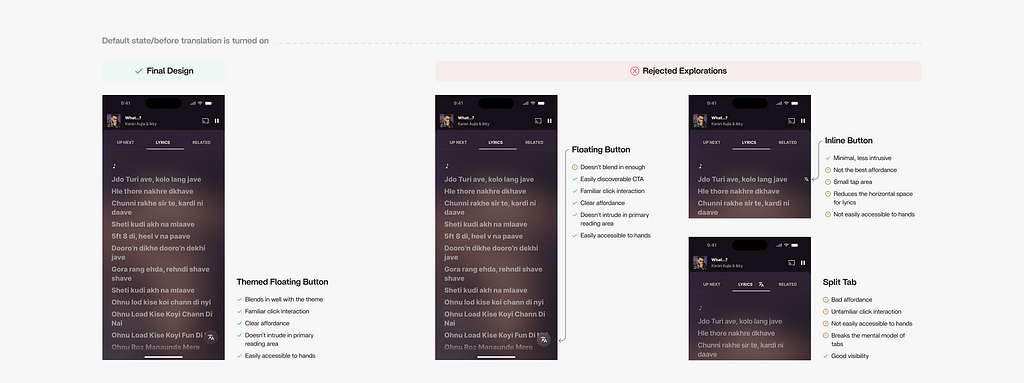

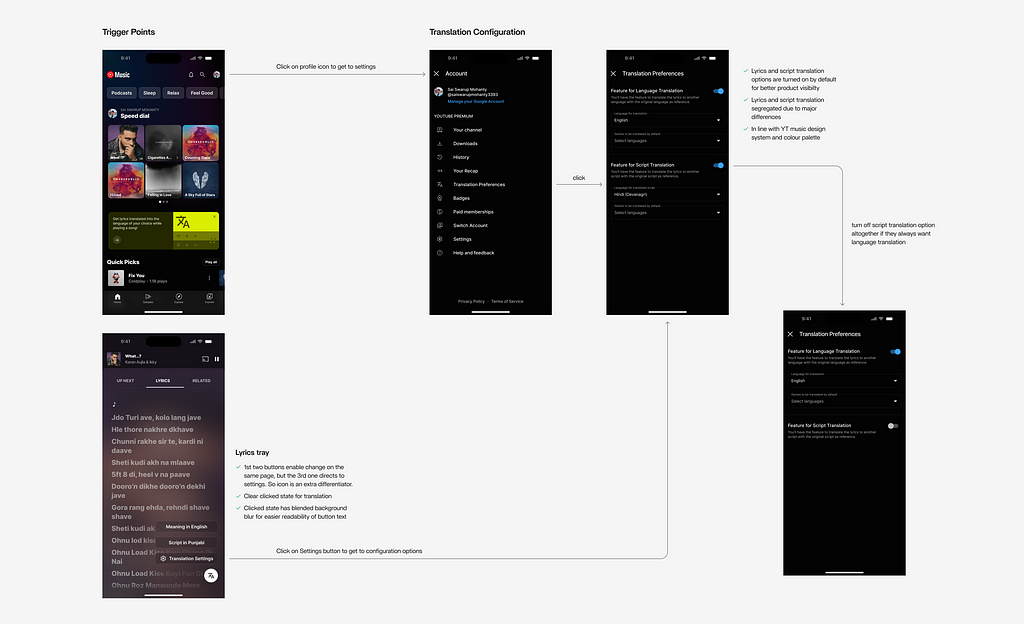

Trigger for Translation

I went down a spiral with explorations, but the A/B testing helped give me a solid direction to pursue.

Translation Experience

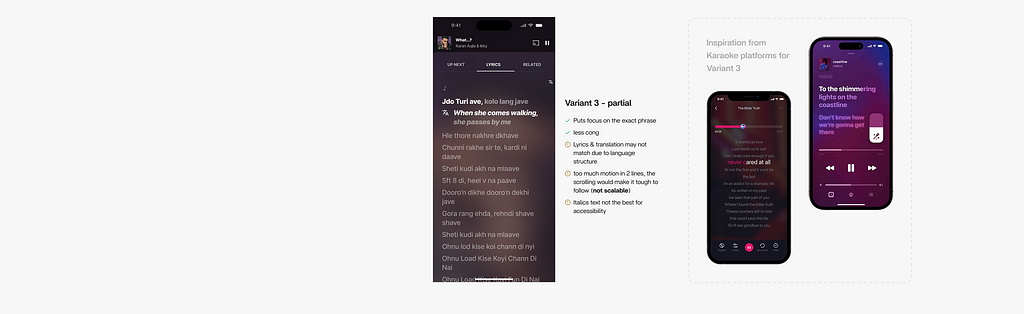

You’ll see a few of the many iterations and why the final design is MY CHOICE here.

Italic text wasn’t used in the final design due to accessibility concerns. None of the users in the A/B testing had a visual impairment of any kind, but it’s not the best choice at scale.

Configuration in Settings

Translation Language: English by default (assuming most YouTube Music listeners understand it, or would know to change it in settings).

Translation Script: the script of the most listened-to song genre by default (e.g., Hindi, Latin). I’m assuming that users who want to read the script would be hardcore listeners/linguists.
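As a rough sketch, these defaults could be modeled like this. Everything here is an assumption made for illustration: the names `TranslationSettings`, `default_script`, and `should_auto_translate`, the genre-to-script mapping, and the "Latin" fallback for listeners with no history are not part of the actual design spec.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class TranslationSettings:
    language: str = "English"  # default translation language from the case study
    script: str = "Latin"      # assumed fallback when there is no listening history
    # Genres where translation is on by default; empty means manual trigger only.
    auto_on_genres: set[str] = field(default_factory=set)


def default_script(genre_history: list[str],
                   genre_to_script: dict[str, str],
                   fallback: str = "Latin") -> str:
    """Pick the script of the most listened-to genre, or the fallback."""
    if not genre_history:
        return fallback
    top_genre, _count = Counter(genre_history).most_common(1)[0]
    return genre_to_script.get(top_genre, fallback)


def should_auto_translate(settings: TranslationSettings, genre: str) -> bool:
    """Translation starts automatically only for genres the user opted into."""
    return genre in settings.auto_on_genres


# Hypothetical mapping and history, for illustration only.
scripts = {"Hindi": "Devanagari", "Spanish": "Latin"}
settings = TranslationSettings(script=default_script(["Hindi", "Hindi", "Spanish"], scripts))
print(settings.language, settings.script, should_auto_translate(settings, "Hindi"))
```

Leaving `auto_on_genres` empty by default mirrors the decision that translation is opt-in and manually triggered unless the user says otherwise.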

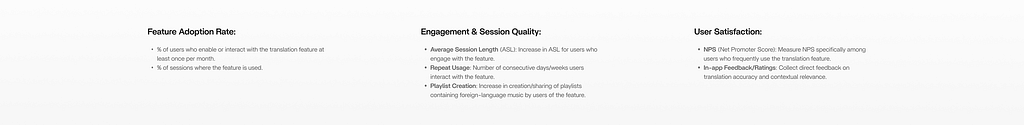

Product KPIs

Here are some key KPIs for me:

Some Edge Cases

Here’s when translation wouldn’t be available:

- No lyrics are found (YouTube Music says this directly in the product today)

- Lines exceed a reasonable character limit (this would break the experience completely; more research and data are needed to determine the character limit and the impact of this decision)

- Poor translation quality from upstream data (Needs definition of what is poor quality, and when/how to determine it)
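The three cases above could be checked in one place before the translate option is offered. This is a minimal sketch under stated assumptions: the 80-character limit and the 0.5 quality threshold are placeholders (both are open questions in this case study), and `translation_unavailable_reason` and `quality_score` are hypothetical names, not an existing API.

```python
from typing import Optional

MAX_LINE_CHARS = 80  # placeholder; the real limit needs research and data
MIN_QUALITY = 0.5    # placeholder; "poor quality" is not yet defined


def translation_unavailable_reason(
    lyrics: Optional[list[str]],
    quality_score: Optional[float] = None,
    max_chars: int = MAX_LINE_CHARS,
    min_quality: float = MIN_QUALITY,
) -> Optional[str]:
    """Return why translation can't be offered, or None if it can."""
    if not lyrics:
        return "no_lyrics"
    if any(len(line) > max_chars for line in lyrics):
        return "line_too_long"
    # A missing score is treated as "unknown", not as poor quality (assumption).
    if quality_score is not None and quality_score < min_quality:
        return "poor_quality"
    return None


print(translation_unavailable_reason(None))
print(translation_unavailable_reason(["Hola, mi amor"], quality_score=0.9))
```

Returning a reason instead of a plain yes/no would let the player show a specific message for each case, the way the product already does when no lyrics are found.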

What’s Next?

If I were working on this product, I would:

- Evaluate if the feature should be part of the Premium plan, based on its usage metrics.

- Build a deeper language preference system

- Support crowd-sourced lyrics improvement (future vision)

- Work with cross-functional stakeholders to define additional edge cases

Major Learnings

- With AI as a companion, brainstorming and critiquing are quicker but it doesn’t replace user research. Testing with users has proved invaluable in this project.

- Learnings from research aren’t always straightforward. Deriving the right insights is just as important.

I’m super grateful to my mentors from ADPList and my close friends for helping me out with design feedback when I needed it the most.

_________________________________________________________________

If you liked this project, don’t shy away from rewarding me with some claps! 👏👏👏

What If… YouTube Music Translated Lyrics in Real Time? was originally published in UX Planet on Medium.