This article explores a user experience paradigm that moves beyond traditional app-centric interfaces: Spatial Memory Triggers.

With the announcement of the Apple Vision Pro release date this week, I thought I would share some insights I have on the future of HCI (human-computer interaction) on this device.

The Past

My previous article on the possibilities of Apple glasses focused on user adoption and social privacy. This article looks at how spatial computers (VR/AR) could implement unique concepts that let users navigate tasks, communications and entertainment more intuitively. The smartphone is king when it comes to intuitive user experience: we use our fingers, mostly our thumbs, to navigate and augment our way through the digital world.

AI Hardware

One of the issues with these new AI hardware products is their disregard for the user experience. Relying on voice commands to interact with a product in public not only raises privacy concerns, but also brings the frustration of correcting errors. None of the demos were conducted in reasonably loud environments. It is still early.

The goal should be to develop devices that build on the intuitiveness of the smartphone.

So what are my insights?

• Physical object memory association

• Information retrieved from the previous location

Object Memory Association

After listening to reviews, observing demos and noting the limited battery life, it seems clear that Apple Vision Pro will mostly be used indoors.

Therefore, Apple Vision Pro has a unique opportunity to give its users access to their tasks, productivity and content that is even better than what the mighty iPhone offers.

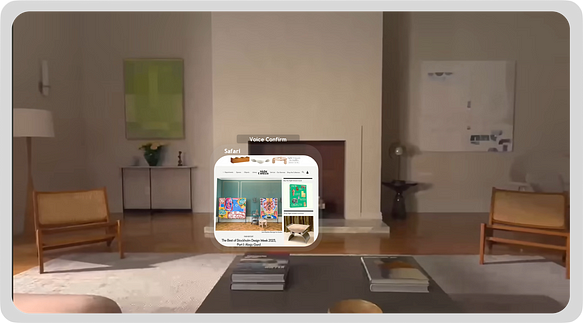

How does it work? Users will be able to associate digital tasks or apps with physical objects in their own environment just by looking at them and confirming.

For example, in their living room, users will be able to associate a particular frame on the wall with a song genre or the family group chat.

- Looking at real paperwork in the environment opens the iOS Notes app.

- Looking at real medicine opens an NHS quick enquiry.

- Looking at the lamp at night opens calming meditation apps and alarm settings.

- The clock on the wall takes you to your calendar.

- A yoga mat is associated with a fitness app, triggering personalized workout routines.

- A water bottle is linked to a hydration tracking app, reminding users to drink water.

- Seeing your cat will, once in a while, show you a random photo from 2015.

- Turning on the coffee machine at a particular time of day starts Apple Music radio.

- The books on the bookshelf open the latest news.

Instead of only starting here…

Users could start here.

They look at a physical object in their environment that they have associated with a digital task, for example a physical book and Safari.

Perhaps there will be different modes, and associations could be sensitive to the time of day.

They then use their voice to confirm or decline the full experience with a single word.

This takes the user not only to Safari but also to a specific tab and subject, based on the user’s association.
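To make the flow above a little more concrete (look at an object, pick the association that fits the time of day, confirm with one word), here is a rough sketch in plain Python. It is not visionOS code and makes no claim about how Apple would build this. The names `Association` and `TriggerRegistry`, the object labels, the action strings and the use of "yes" as the confirmation word are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from datetime import time
from typing import List, Optional


@dataclass(frozen=True)
class Association:
    """Hypothetical link between a recognised object and a digital action."""
    object_label: str              # e.g. "book", as reported by some object recogniser
    action: str                    # e.g. an app plus a specific tab or playlist
    start: time = time(0, 0)       # start of the window in which this is active
    end: time = time(23, 59, 59)   # end of that window

    def active_at(self, now: time) -> bool:
        # A window such as 20:00 -> 06:00 wraps past midnight.
        if self.start <= self.end:
            return self.start <= now <= self.end
        return now >= self.start or now <= self.end


class TriggerRegistry:
    """Hypothetical store of the user's object associations."""

    def __init__(self) -> None:
        self._associations: List[Association] = []

    def associate(self, association: Association) -> None:
        self._associations.append(association)

    def propose(self, object_label: str, now: time) -> Optional[Association]:
        # Return the first association for this object that is active right now.
        for candidate in self._associations:
            if candidate.object_label == object_label and candidate.active_at(now):
                return candidate
        return None

    def trigger(self, object_label: str, now: time, spoken_word: str) -> Optional[str]:
        # Nothing launches unless the user confirms with a single word.
        proposal = self.propose(object_label, now)
        if proposal is not None and spoken_word.strip().lower() == "yes":
            return proposal.action
        return None


registry = TriggerRegistry()
registry.associate(Association("book", "Safari: news tab"))
registry.associate(Association("lamp", "Meditation app", time(20, 0), time(6, 0)))
```

With this setup, looking at the book at any time and saying "yes" would return the Safari action, while the lamp only proposes the meditation app between 20:00 and 06:00. Keeping the confirmation step separate from the lookup means a glance on its own never launches anything.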

Example #2 with AirPods

Resume a song, or do something unrelated that the user has set up. For example, resume a TV show when looking at your AirPods.

A new symbiotic relationship: leaving old memories and songs with old items.

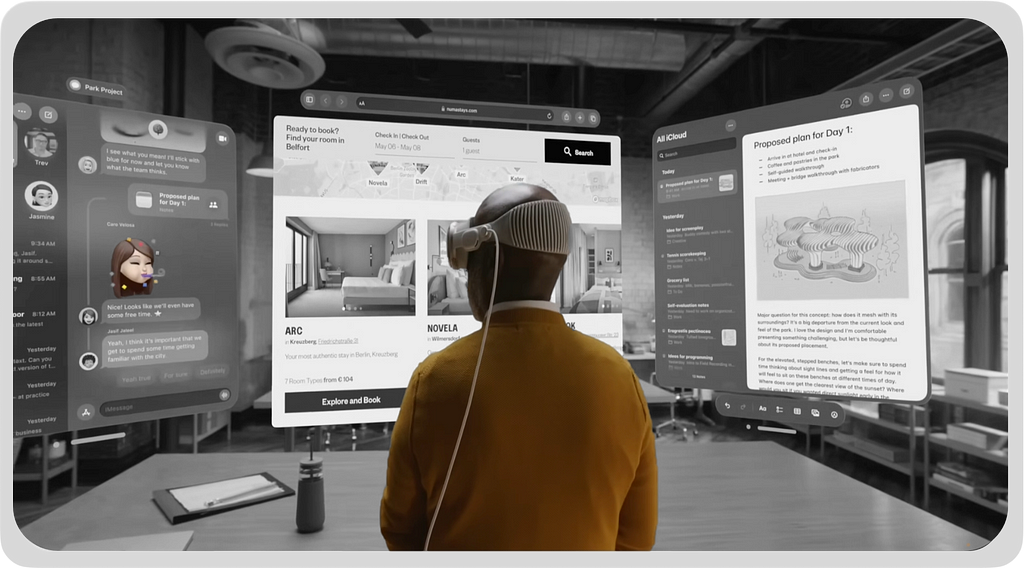

Possibilities below. Obviously, not all apps will be shown at once unless the user is changing a physical object’s association to another digital application. A simple UI wins the user over by not overwhelming them.

The premise, and the analogy, is to use generic real-world objects in your environment as safe boxes: not only to store your tasks and digital assets but to associate them with physical things. This is how our memory works.

Relaxation content comes on after looking at a particular angry person. Or, at your own peril, Viking music plays to match their energy.

The future should be simple, but more.

The idea is inspired by the phenomenon of memory association. Looking at a photo frame of your family could launch a WhatsApp conversation with them. This creates a more intuitive and context-aware way to manage communication. Glancing at your desk lamp instantly resumes your unfinished work session from earlier that day. This saves time and mental effort compared to searching through menus.

Objects like chairs, tables and doors are found in almost all indoor environments, which makes this concept explorable within the next few years.

Information retrievability from the last location

This works a little differently, by retrieving the precise last-used task or app. For example, when you sit at your kitchen table in the morning, the Vision Pro will recognise this exact position and ask if you want to retrieve the last 3 tasks you were doing the last time you were seated here: a particular website, a particular PowerPoint slide, a song, etc.

At another location, sitting at the top of the stairs, the user may reopen that unfinished New Year resolution list in Apple Notes, unpause a YouTube video from 2 nights ago, or open up the conversation with the last person they communicated with.
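The "last 3 tasks at this spot" idea can be sketched as a small per-location history. This is only an illustration in plain Python under stated assumptions: `LocationHistory` is a hypothetical name, locations are plain strings, and recognising that the user is at "kitchen table" is assumed to happen elsewhere.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List


class LocationHistory:
    """Hypothetical per-location memory of the most recently used tasks."""

    def __init__(self, max_tasks: int = 3) -> None:
        # Each location keeps only its most recent `max_tasks` tasks.
        self._tasks: Dict[str, Deque[str]] = defaultdict(
            lambda: deque(maxlen=max_tasks)
        )

    def record(self, location: str, task: str) -> None:
        history = self._tasks[location]
        if task in history:
            history.remove(task)   # re-using a task moves it to the front
        history.append(task)       # the oldest task drops off when full

    def recall(self, location: str) -> List[str]:
        # Most recent first; an unknown location yields an empty list.
        return list(reversed(self._tasks.get(location, ())))


history = LocationHistory()
for task in ["a website", "a PowerPoint slide", "a song", "a YouTube video"]:
    history.record("kitchen table", task)
history.record("top of the stairs", "New Year resolution list")
```

Here `history.recall("kitchen table")` would offer the video, the song and the slide, in that order, because the website has aged out of the three-item window. The stairs keep their own separate list, which is the point: the place, not the app, is the index.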

These concepts are a form of the iPhone App Switcher, enhanced with the Vision Pro experience. They recreate your collective reality to retrigger memory in the form of digital content, and they offer an easier way to navigate using real objects and your environment.

Returning to a specific spot in your house could trigger reminders about past events or information associated with that location. This could help you learn new skills or recall details from previous activities.

For creativity and inspiration, we all have digital folders in apps where we save inspiring content but rarely revisit it. (When did you last check your Twitter bookmarks or Instagram saved photos?) Brainstorming becomes interactive when you can associate objects in your environment with different digital inspirations. (Maybe a Creative Mode switch, or a before-8am and after-8pm content mode.) Those animated UI designs from 2 years ago that you wanted to replicate are still stored within the plants downstairs.

It is a moment of serendipity, of going back in time. Your environment becomes a playground for your creativity, fueled by the wonders of its objects. If a physical object is gone, taking its memories with it, you could check your saved associations. There is also the opportunity to share these hidden moments with others in your environment who want to try your Vision Pro (with adjusted privacy settings, etc.).

Getting to see the familiar Apple icon menu in such new dimensions with Vision Pro must be nothing but incredible. However, the introduction of new devices should always enhance the user’s daily experience.

From a user’s perspective, these collections of moments and memories would be created gradually. With Vision Pro, the user’s productivity is augmented and the use case is clear. However, the onboarding that teaches users how to do this has to be well tailored.

Putting on a device is less psychologically fatiguing with eye tracking and no controller. Even so, easy access to desired tasks can benefit from these insights. They could also have a positive impact during the early stages of dementia, or improve accessibility for people with disabilities. Your environment becomes an extension of your digital life, creating a more seamless and integrated experience.

AR technology has massive potential, and it comes down to how the digital domain overlays and interacts with the physical environment. Objects are used to create a delightful visual experience beyond the ordinary.

The concepts in this article focus on how the everyday physical objects in your home can enhance your digital experience by creating seamless integration and using the environment to trigger quick actions and information retrieval. I call it Spatial Memory Triggers.

Movability creates retrievability. Retrievability creates movability. Could it impact human behaviour?

From a product development perspective, the fun part is bringing this to fruition and solving problems at scale. Technical limitations, privacy concerns, user adoption and so on are left to other minds.

Apple Vision Pro’s Untapped Potential for Spatial Reality with AI was originally published in UX Planet on Medium, where people are continuing the conversation by highlighting and responding to this story.