PRACTICAL DESIGN INNOVATION

Language for evaluating Generative AI experiences

Where Generative AI UX Design Patterns explores what can be built, this article explores ways to evaluate what has been built.

Generative AI UX Design Patterns

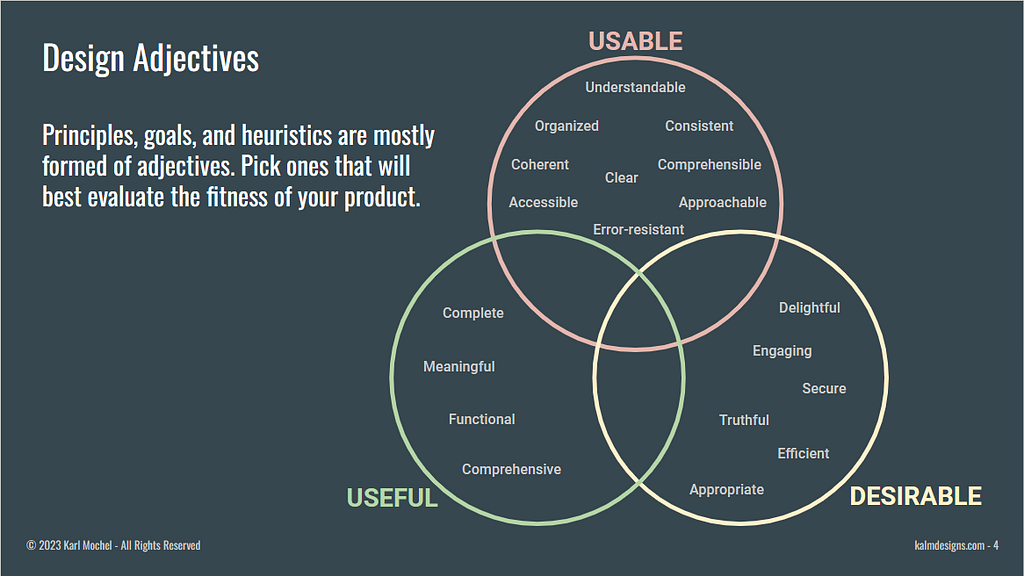

This article expands on my Design Adjectives Medium article by adding adjectives to assess Generative AI experiences.

If you haven’t read it, I highly suggest reading Design Adjectives first…

The new adjectives

New adjectives are needed because the black-box nature of genAI cognition and emerging agentic behaviors will create experiences where the user’s relationship with systems will radically change. The following adjectives are meant to facilitate conversations about users’ relationships with emerging genAI systems…hopefully at least wandering towards better experiences for users.

Discernible — User understands how Generative AI is applied to experience.

GenAI can be applied to experiences in ways that are not obvious, apparent, or discernible. Discernible asks us to question whether the user knows, wants to know, or should know whether AI is being used in an experience.

- How much do users care about how much genAI is used in an experience?

- How do users know how much genAI is used in an experience?

- What should users’ rights be to know how genAI is used in an experience?

Agency — User is empowered to control their experience.

Before genAI, users’ agency was governed by the logic of the application. With genAI becoming more of an intermediary and used to create agents, user agency is under ever greater and very different tension.

- Does the user understand how AI is participating?

- Does the user have any control over how the AI participates?

- Does the user need to override the AI?

- Does the user have an opportunity to provide feedback?

- Does the AI limit the user from achieving cognitive or artistic goals?

Controllable — User is able to affect the specific aspects of work they desire.

Agency asks whether the user is able to act at all. Controllable asks the next question: when the user does act, how much control do they have over the activity?

GenAI is good at producing something but often bad at producing results that match specific criteria. For example, I can give Midjourney a prompt and get back a picture that is interesting, but if I want specific attributes, such as a planet covered with a labyrinth (my go-to test), it has yet to create anything approaching what I want. Adobe tools allow me to control where genAI results are placed, but what gets created is still determined by the cognitive distance between the prompt and the system’s ability to “understand” what the user actually desires.

- What does Controllable mean in conversational experiences?

- What does Controllable mean in experiences with multiple modalities of input and output?

Can the user ever adequately control an experience to meet their specific desires via the given modalities? Does a prompt provide enough control to get a specific scene from Midjourney? Can a driver control a car appropriately? Is Tony Stark in control of Jarvis?

Understanding — The experience understands what the user needs or desires.

One of the heuristics in my original article was Understandable — the experience is logical and consistent. With AI we want to look at Understanding — whether the experience understands what is needed or desired.

As genAI becomes more pervasive and capable, we will desire its understanding of us, our history, our context, our needs, and our desires.

- How does the experience acquire context that will provide the desired results?

- How proactively should the system prompt for context?

- How much do we want the system to understand us?

- If letting the system know us intimately actually enables it to be a good helper or companion, should we, and do we want to, provide data and information?

GenAI’s ability to understand what we desire from minimal prompting is and will be key. Our interest in giving genAI access to our context, so that it understands us, will increase with the tool’s ability to use that context effectively.

Transparency — User is able to introspect into how and why results are generated.

While it may be possible to provide transparency into specific AI activities, it isn’t likely that the tooling will be instantaneous or comprehensible to laypeople.

When users receive unexpected results in chat experiences like ChatGPT and Midjourney, their only recourse is to try to change the prompt or provide feedback to the model builders.

It is understandable that different circumstances engender different levels and modes of transparency. When a legal team asks for a summary of cases, what kind of transparency should they expect? The system probably falls under the company’s control, so they might expect high transparency. When a car does something unexpected, what transparency does the user desire? Transparency in the moment would be problematic.

Creating transparency into how the system transformed billions of parameters into the results seems impossible, but it will be required for professionals and desired by laypeople…sometimes.

Creating ways to indicate the provenance of data and how it was weighed, evaluated, and structured for the results can help users understand how to interact with the system better and enhance trust…assuming the system accomplished the activities in a way the user finds understandable.

- Is there an impact if the user does not know why the system responded incorrectly, inappropriately, or problematically?

- What information would be needed for this experience to be transparent?

- Would transparency help the user trust the experience?

- Would transparency be valuable to creating a better experience?

Also, consider that “Transparency” is currently only available to the company developing the experience, as the data has technical and intellectual property implications. What are a user’s rights to transparency?

Trustworthy — User can trust that information created and actions taken on their behalf do not contradict good practice, beliefs, or existence.

- Can the user trust the AI?

- Can society trust AIs?

- What might the system do that the user finds untrustworthy?

- What does the experience provide to help the user trust it?

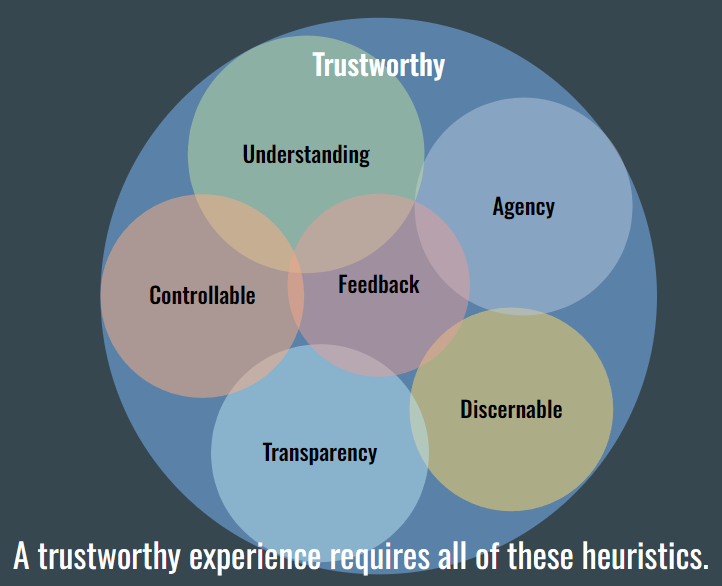

Although it overlaps with other heuristics mentioned here, the Deloitte Trustworthy diagram provides additional language applicable to system-level trustworthiness, such as adaptable, consensual, and inclusive.

Feedback — User has appropriate methods for telling the system it is doing something unexpected or problematic.

While feedback at every step, from conception to creation through use by users and independent parties, is important for building, deploying, and using genAI tools, we’re talking about the user’s ability to provide feedback.

Feedback is more difficult to provide in a conversational or agent-driven experience. There are more things to provide feedback on than with a logic-based system, such as transparency, actionability, appropriateness, cognition, etc.

- Is feedback possible when the user encounters unexpected results?

- Would the ability to provide feedback be valuable to creating a better experience?

- Should the user know that feedback was taken and acted upon?

- What feedback is possible and meaningful as complex cognitive abilities such as attention, abstraction, and reasoning develop?

It feels important to acknowledge an anti-heuristic…

Darkness — The ability to subversively affect a population.

Existing dark designs like un-completable unsubscribe flows and doom scrolling require specific participation from the user. The agendas of existing systems (Facebook, X, Truth Social) evolve from how they are managed. AI-based systems can be built to create content that maintains an agenda. AI expands the ability to create subtler mediated interactions that users may not know they are participating in. GenAI makes creating inimical experiences easier because of how much it speeds up evaluating, individualizing, and creating content to meet agendas and drive end-user biases, desires, and motivations.

GenAI makes it easier to create experiences…

- That control who can say what to whom.

- That control whether or not certain ideas are allowed to propagate.

- That enforce or empower biases, whether inherent or manufactured.

- That use misinformation, disinformation, and fakes.

An inverse of darkness doesn’t seem to capture the mindspace that should be considered — so it seems important to keep this aspect in mind instead of providing a positive heuristic.

Conclusion

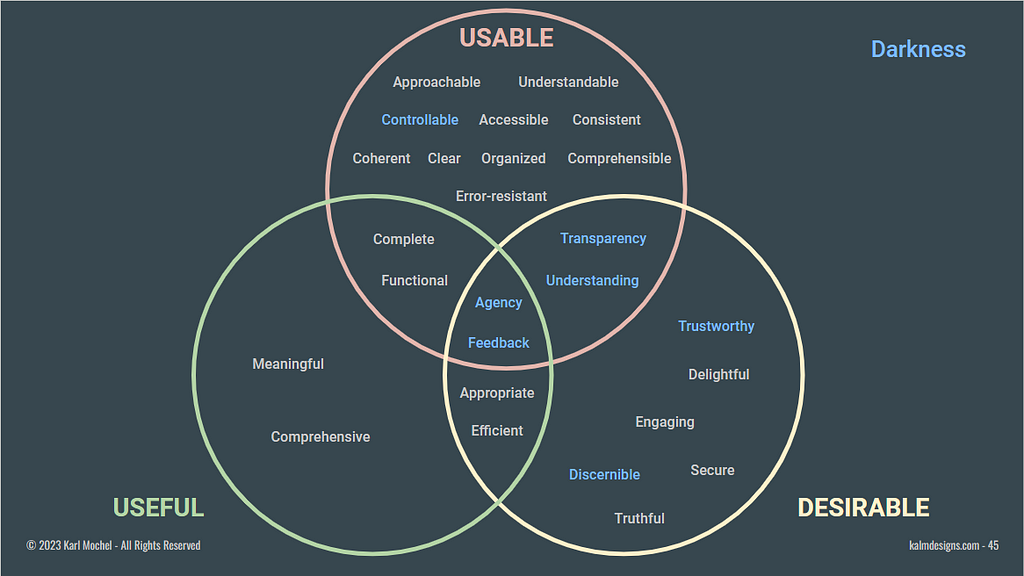

We return to the diagram with some additions.

Some of the new heuristics fit into a single criterion for designing user experiences, while others might be better suited to multiple criteria.

It’s a work in progress…

Generative AI UX Design Adjectives was originally published in UX Planet on Medium, where people are continuing the conversation by highlighting and responding to this story.