Insights on how the AI hype feeds on good UX and what to expect next.

Companies like Google have been committed to AI for decades, actively contributing to science and investing in the talent that brought the field to the level we know today. This article uses Google as an example, but the same logic applies to others like Meta, Apple and Amazon.

ChatGPT is a well-executed version of a technology that Google started in 2017. During Google I/O 2021, Google demoed LaMDA for the first time but kept it as an internal project that was not openly accessible to the public.

How did ChatGPT add an extra sparkle at the end of 2022?

There are different chatbot technologies, and my current favourites are Anthropic’s Claude and Google’s Gemini. For a moment, I’ll dedicate this section to OpenAI’s ChatGPT since it popularised the idea of Generative AI chatbots and has become a generic brand name for AI to many.

With its exceptional execution from a user experience (UX) perspective, ChatGPT captured the imagination and anthropomorphised artificial intelligence (AI) in the minds of many. Through a single, straightforward chat interface, ChatGPT empowers users to “query” anything and get an aggregated result of whatever they ask.

The output includes text, essays, code, scripts, lesson plans, and lyrics. It looks outstanding because the chatbot does its job while appearing confident and exceptional; being accurate, factual or correct is another matter. Don Norman, a thought leader in UX design, once stated that “Attractive things work better”, and ChatGPT is another realisation of this idea. The flow of the dialogue and the way it fulfils queries are outstanding, and it is, so far, the best interface we have witnessed for a Large Language Model (LLM). As expected, this eventually evolved into a Large Multimodal Model (LMM), a system that can receive input in different modalities (images, video, audio, code, etc.) and return output in those modalities.

I wear two hats: one as a casual user/developer/entrepreneur and the other as an AI academic.

As a user, I give ChatGPT 9/10 for its usability. This model is only a few clicks away for any user, and as long as it is available, everyone can try it out and witness its power. As users, we have seen only a handful of such neatly executed experiences in the past two decades.

On the other hand, from an AI perspective, ChatGPT is an AI system that blissfully hides its limitations and, above all, convinces us it’s right even when it is not. Whenever machine learning (ML) models are used to generate an output, that output is generally accompanied by a confidence score. In this case, and so far, not only do chatbots not report a level of confidence, but they also phrase their output very confidently.
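To make the point about confidence concrete, here is a minimal Python sketch of how a typical classifier can report a confidence score next to its answer. It is an illustration only: the labels, the raw scores and the function names are made up for this example and do not come from any specific model or library.

```python
import math


def softmax(logits):
    """Turn raw model scores into probabilities that sum to 1."""
    top = max(logits)
    # Subtracting the largest score keeps the exponentials numerically stable.
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_with_confidence(labels, logits):
    """Return the most likely label together with its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Hypothetical labels and raw scores, chosen only for illustration.
labels = ["cat", "dog", "fox"]
logits = [2.0, 1.0, 0.1]

label, confidence = predict_with_confidence(labels, logits)
print(f"Prediction: {label} (confidence: {confidence:.0%})")
```

A chatbot answer, by contrast, arrives as fluent text with no such number attached, which is why it is so easy to read it as certain.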

In other words, this technology is an excellent brainstorming assistant and can help us analyse information in ways humanity has never experienced before. It is also important to appreciate what it is and what it is not. Since we need to verify the facts that chatbots return, they are not yet a replacement for search engines; they should complement them.

How was Google dragged into the debate?

Countless articles and opinions suggested that ChatGPT by OpenAI would be a real threat to Google. There are different ways of looking at this reaction. It could have been triggered by the fact that Google has been leading a substantial part of the innovation in AI for the past decade, thanks to its massive user base and never-ending range of products.

It could also be down to the sheer entertainment of seeing a new gladiator with potential stepping into the arena against the search titan. This was fuelled by Microsoft’s investment in OpenAI and indications that it would integrate this technology into its software suite. Microsoft has always tried to compete against Google in the search space through MSN, Explorer/Edge and Bing. None of these appears to have made significant dents. However, the hype around ChatGPT has reignited interest in this race.

Google spent the first months of 2023 in relative silence and did not engage in the “ChatGPT debate”. The first reactions came with an AI event in February 2023, followed by Bard, and Google eventually wrapped up 2023 with Gemini. The year 2024 was one of consolidation for Gemini and its byproducts, such as NotebookLM, and it closed with Gemini 2.0, a glimpse of the barrage of products and enhancements we will witness in 2025.

Google’s Gradual Rollout Strategy

Let’s not forget that Google, like Meta and others, is one of the current leaders in AI and carries significant responsibility. We trust Google with a considerable chunk of our lives: email, calendar, navigation, storage, phone operating system and more. Google wouldn’t have wanted to risk the trust in its brand for a chat app, at least not before it was sure to get it right. The naming reflects this too: their first product was called Bard, and once they were sure it was fine, they rebranded it to Gemini. Gemini was their favourite brand name: it starts with a G, and it evokes the idea of your AI twin.

All experts, including those at OpenAI, know about the limitations that linger behind the fascination with LLMs. Reading between the lines, one can also notice that Microsoft is carefully and gently associating itself with ChatGPT without making any claims about its current performance or use. It is a matter of commercial speculation on future potential.

What did Google bring to the current debate at the AI table?

Short answer: plenty.

Like other major companies, Google has been committed to AI for decades. But how? It publishes its findings, releases technology that makes AI accessible, open-sources frameworks that enable AI, and supports communities and developers to make sure AI has a positive impact. Below is how it does this.

Publications

From an academic perspective, Google has been very open about its efforts in AI. Among its numerous published papers, it introduced key contributions to modern AI that led us to this generative AI conversation.

According to Google Research’s publications database, the company had published 10,192 academic papers, with another 180 by Google DeepMind, at the time of writing this article. This is an impressive figure, particularly when one considers that publishing as a private company provides transparency that exposes risks in a commercial context. On the other hand, it draws admiration and underlines the calibre of the scientists behind its innovation.

Out of these 10K+ publications, I picked a few personal favourites that I feel were an exceptional contribution to AI over the recent years:

- “Sequence to Sequence Learning with Neural Networks” (2014): The seq2seq paper pioneered using two RNNs to process variable-length sequences. This revolutionised machine translation and laid the groundwork for text summarisation and speech recognition tasks. This paper (together with GANs, mentioned below) was awarded the NeurIPS Test of Time Award in 2024 for its relevance and impact.

- “Going Deeper With Convolutions” (2015): This paper introduced the “Inception” (v1) architecture, a key innovation in CNN design. Inception networks use multiple filter sizes in parallel, allowing them to capture features at different scales within an image. This significantly improved image recognition accuracy and efficiency and formed the basis for many later Google models.

- “Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift” (2015): This paper tackled a significant problem in training deep neural networks: internal covariate shift. Batch Normalization provided a solution, significantly speeding up training and improving stability. It’s now a standard technique used in almost all deep learning models, not just CNNs.

- “Xception: Deep Learning With Depthwise Separable Convolutions” (2017): This paper explored a new type of convolution called “depthwise separable convolution”. This approach significantly reduces the number of parameters and computations needed, making CNNs more efficient.

- “Attention Is All You Need” (2017): Also known as the Transformer paper, it is the foundation of technology like ChatGPT.

- “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” (2018): This paper demonstrated the potential of transformer technology and improved how AI understands the meaning behind words, leading to better search results, translation, and more.

- “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks” (2019): This paper introduced the compound scaling method for CNNs, and EfficientNet has become a go-to architecture for many computer vision tasks. Its influence can be seen in various applications.

- “Highly accurate protein structure prediction with AlphaFold” (2021): The paper for DeepMind’s AlphaFold, which predicts protein shapes with stunning accuracy. This has massive implications for drug discovery and disease understanding, and it is used by thousands of researchers worldwide. Members of the team behind it were awarded a share of the 2024 Nobel Prize in Chemistry.
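The parameter savings mentioned above for depthwise separable convolutions can be checked with simple arithmetic. The sketch below is a rough illustration that ignores bias terms; the layer sizes and function names are assumptions picked for this example and are not taken from the Xception paper.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a regular convolution: one kernel x kernel filter
    per (input channel, output channel) pair."""
    return kernel * kernel * c_in * c_out


def separable_conv_params(kernel, c_in, c_out):
    """Weights in a depthwise separable convolution: one kernel x kernel
    filter per input channel (depthwise step), followed by a 1x1
    convolution that mixes the channels (pointwise step)."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 128 input channels, 256 output channels.
kernel, c_in, c_out = 3, 128, 256

standard = standard_conv_params(kernel, c_in, c_out)
separable = separable_conv_params(kernel, c_in, c_out)

print(f"Standard convolution:  {standard:,} weights")
print(f"Separable convolution: {separable:,} weights")
print(f"Reduction factor:      {standard / separable:.1f}x")
```

For this made-up layer, the separable version needs 33,920 weights instead of 294,912, which is roughly 8.7 times fewer.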

There are other landmark publications whose authors were not directly affiliated with Google at the time of publication but joined the company soon after and built upon their original work. One of the most notable cases is Ian Goodfellow’s work on Generative Adversarial Networks (GANs). His original GAN paper was published when he was still a student at the University of Montreal. However, he joined Google Brain immediately afterwards, where he continued publishing further work related to GANs. The work associated with GANs was the first in recent history to trigger the imagination of what AI can “create”; it paved the way to where we are now and still sheds light on tomorrow.

John Nay on Twitter: “AI research output from prolific institutions: Google, Microsoft, Stanford, Meta, Amazon, DeepMind, OpenAI” (pic.twitter.com/Z28TP87VY4)

Google is not the only big company that does this. Meta also attracts high-quality research talent, and under the direction of LeCun, it keeps pushing the current boundaries of AI. However, Meta carries a responsibility similar to Google’s, and any revolutionary technique or technology would need to be green-lit before publication or dissemination. The same applies to other tech giants, namely Amazon and Microsoft.

Case in point: the Computer Vision Foundation makes the publications of the leading CV conferences open access. Amazon, Microsoft, Google and Facebook sponsor this significant expense. This initiative allows millions of students, academics and researchers worldwide to access the information published at these conferences.

Other companies play a different game.

Cloud Technology

Google was not the first mover in cloud technology as we know it today. Amazon’s AWS and Microsoft’s Azure revolutionised the space. Google quickly followed and invested heavily in this scalable platform that perfectly fits its AI ecosystem. It provided the infrastructural platform for AI technology to reach users worldwide.

The development of the Tensor Processing Unit (TPU) at Google also facilitated faster training of neural networks. It was mainly served through Google’s cloud services before also being shipped as an off-the-shelf IoT solution. A quick way to access it is through Google Cloud.

Another important cloud-based technology through which Google facilitates and democratises AI is the Google Colaboratory Project, aka Colab. This is a cloud-based deployment of Jupyter Notebooks, an interactive environment for running Python code and quickly prototyping a wide range of ML projects.

Google’s Colab allows everyone to rapidly run Python code on the cloud without needing configuration while having access to GPUs and TPUs. From an educational and community development perspective, Colab notebooks are also excellent because they are easy to share and facilitate collaboration, hence the name.

Gemini and Gemma (the open-source version) are widely available for free on Google’s AI Studio. Over the past months, this product saw significant developments and uplifts. It is intended for developers to build using Gemini, and it’s also an excellent product that anyone can use.

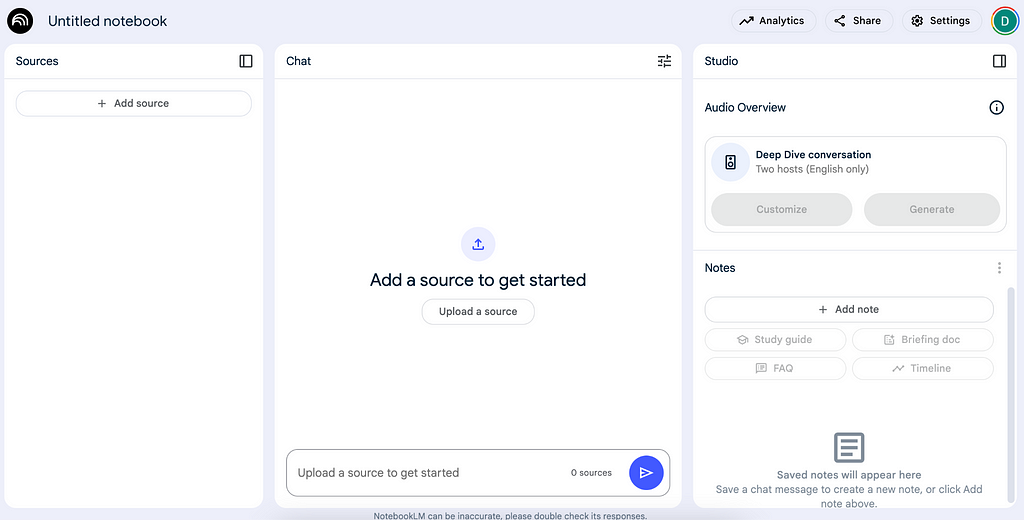

The recent supertool gaining popularity is NotebookLM. It was initially announced as Project Tailwind at Google I/O 2023 and launched as NotebookLM later that year. Its killer feature was the artificial podcast functionality, which offers a deep dive into the sources you add to the notebook. By the end of 2024, it had received a significant update to its UI and an improved Audio Overview capability that allows the user to join the conversation using voice. NotebookLM shifted the perspective from a chat interface to one that focuses on sources, and it has the potential to reshape education, research, and a myriad of other industries.

When working on production-level cloud solutions, the Google Cloud Platform offers a wide range of AI solutions that provide the required robustness when needed.

TensorFlow

TensorFlow is an open-source project by Google that provides a free machine-learning library/framework. TensorFlow has come a long way since its initial release in 2015. Since it was combined with the Keras library in version 2.x, TensorFlow has become easier to use and abstracts low-level ML concepts that might not be necessary when solving real-world problems.

Google’s main contribution to AI with TensorFlow was that this framework made AI accessible to the dev community. PyTorch is an equally relevant and powerful framework, released one year later by Meta, and is now a project of The Linux Foundation.

The presence of two powerful and relevant frameworks further democratised the application of ML and AI, with significant projects being built on them. This ‘competition’ kept both frameworks developing, and there is a lot that the academic and dev community can build with these tools.

Community

Rooted in the communities around us, passionate developer groups are building a better tomorrow together. I have been part of this community since 2015 (I can’t believe it’s already been 10 years!) and have witnessed first-hand the passion and dedication of those willing to share and support others.

Google invested heavily in this important element of the AI ecosystem. Through its Google Developers programme, Google provides free resources for these communities to use and empower other developers to build tomorrow’s technology at a community level.

These communities tackle different topics and technology. One of these topics is AI. If you do not feel like participating in a community just yet, there are plenty of free resources you can learn from on the Google Developers Machine Learning website.

There is a more fun version of this through communities! The Google Developer Groups bring developers together to share knowledge and exchange experience. It is also a platform for developing meaningful solutions for communities at large.

What can we expect?

The AI race is on, and Google is one of the top players. Over the past year, they released a long list of products and updates, which their blog outlines neatly. At this stage, the industry’s focus is on developing better products. The scientific potential of Generative AI has now been tried and tested. In the short term, there will probably be more of a leap on the product side than in the underlying technology. Much work remains to be done on the UX of AI and interaction design.

For now, better models depend on computational resources. But that might not be the case for long: just a few days before the end of 2024, DeepSeek was released by a Chinese team. This open-weights model performs on par with the current top products and uses far fewer resources for training.

2025 promises to be another year in which powerful technology will be released. The opportunities for reshaping social constructs and building a better tomorrow will be substantial. Big tech is doing the heavy lifting, and it is up to us to build products and research that make a difference.

If you are interested in AI, I’m listing a few of my articles that you may find relevant in the current context of how AI is shaping our world:

- A starter guide in AI that I wrote back in 2018, but most principles are still relevant

- Suggestions and insights on how to deal with the hype around AI

- The opportunity in AI that Europe is not yet taking

- My perspective on how AI is reshaping education

I am a lecturer in the Department of AI at the University of Malta and part of the Google Developer Groups (GDG) community. Within the GDG communities, we aim to facilitate the use of technology in our community without receiving any financial incentives. The views presented in this article are my own.

“Google is ‘back’, and why is that not a surprise?” was originally published in UX Planet on Medium.